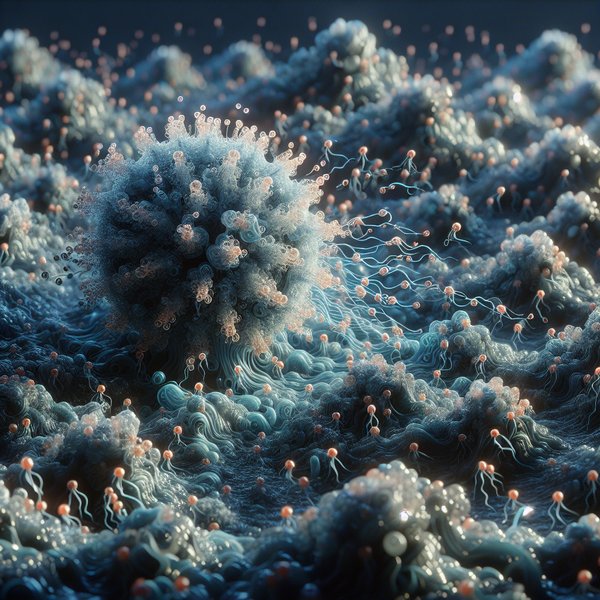

Small planktonic organisms and autonomous robots operating in the ocean often have to navigate through fluid flows using only on-board sensor data. In recent years, fluid mechanics researchers have increasingly turned to reinforcement learning (RL) to tackle this problem. However, it remains unclear how effective the strategies learned by these algorithms actually are.

A recent study carried out a quantitative evaluation of reinforcement learning methods for navigation in partially observable flows. It introduced a well-defined navigation problem for which a quasi-optimal policy is known, and examined how commonly used algorithms such as Q-learning and Advantage Actor-Critic (A2C) perform in several flows: Taylor-Green vortices, the Arnold-Beltrami-Childress (ABC) flow, and two-dimensional turbulence. The results showed that these algorithms perform poorly and lack robustness compared with the more advanced Proximal Policy Optimization (PPO) algorithm.
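The study's exact swimmer model and observations are not described here. As a rough illustration of one of the flows named above, the sketch below assumes the standard steady two-dimensional Taylor-Green cell, derived from the stream function ψ = u0 sin(x) sin(y), and a hypothetical swimmer that moves at a fixed speed in a heading chosen by the policy. The names `taylor_green_velocity` and `swimmer_step` and the parameters `v_swim`, `dt` and `u0` are illustrative assumptions, not taken from the study.

```python
import math


def taylor_green_velocity(x: float, y: float, u0: float = 1.0) -> tuple[float, float]:
    """Velocity of a steady 2D Taylor-Green vortex cell at (x, y).

    Obtained from the stream function psi = u0 * sin(x) * sin(y)
    via u = d(psi)/dy and v = -d(psi)/dx, so the field is divergence-free.
    """
    u = u0 * math.sin(x) * math.cos(y)
    v = -u0 * math.cos(x) * math.sin(y)
    return u, v


def swimmer_step(x: float, y: float, heading: float,
                 v_swim: float = 0.5, dt: float = 0.1,
                 u0: float = 1.0) -> tuple[float, float]:
    """One explicit Euler step for a swimmer carried by the flow.

    Hypothetical model (assumption, not the study's): the swimmer adds its own
    constant-speed velocity, pointing along `heading` (radians, chosen by the
    policy), to the local flow velocity.
    """
    u, v = taylor_green_velocity(x, y, u0)
    x_new = x + dt * (u + v_swim * math.cos(heading))
    y_new = y + dt * (v + v_swim * math.sin(heading))
    return x_new, y_new
```

In an RL setting, the policy would pick `heading` at every step from its local observations, and the reward would measure progress toward the navigation target; how the study defines both is not assumed here.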

The study showed that PPO, enhanced with vectorized environments, generalized advantage estimation (GAE), and hyperparameter optimization, outperformed the other algorithms and even matched the theoretical quasi-optimal performance in turbulent flow. This result underscores how much algorithm selection, implementation details, and fine-tuning matter when developing autonomous navigation strategies for complex fluid environments.
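GAE, one of the enhancements listed above, blends one-step temporal-difference errors δ_t = r_t + γ V(s_{t+1}) − V(s_t) into an advantage estimate through the recursion A_t = δ_t + γλ A_{t+1}. Below is a minimal sketch in plain Python, assuming a single rollout of length T with a bootstrap value for the state after the last step. The helper name `compute_gae` and the defaults `gamma=0.99` and `lam=0.95` are illustrative assumptions, not values reported by the study.

```python
def compute_gae(rewards, values, dones, last_value, gamma=0.99, lam=0.95):
    """Generalized advantage estimation over one rollout of length T.

    rewards[t] and dones[t] describe the transition taken from step t;
    values[t] is the critic's estimate V(s_t); last_value is V(s_T), used
    to bootstrap. dones[t] = 1.0 if the episode ended after step t, which
    stops both bootstrapping and advantage propagation across episodes.
    Returns (advantages, returns), where returns = advantages + values
    serve as regression targets for the critic.
    """
    T = len(rewards)
    advantages = [0.0] * T
    gae = 0.0
    # Sweep backwards so each A_t can reuse A_{t+1}.
    for t in reversed(range(T)):
        next_value = last_value if t == T - 1 else values[t + 1]
        not_done = 1.0 - dones[t]
        delta = rewards[t] + gamma * next_value * not_done - values[t]
        gae = delta + gamma * lam * not_done * gae
        advantages[t] = gae
    returns = [a + v for a, v in zip(advantages, values)]
    return advantages, returns
```

With λ = 0 the estimate reduces to the one-step TD error (low variance, more bias). With λ = 1 it becomes the bootstrapped discounted return minus the baseline (less bias, more variance). With vectorized environments, the same routine would simply be applied to each environment's rollout.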

Ultimately, the study highlights the critical role that advanced algorithms such as PPO, together with the implementation techniques that accompany them, play in achieving efficient and robust navigation in dynamic fluid flows. It is a valuable contribution to ongoing efforts to improve autonomous navigation in challenging environments.