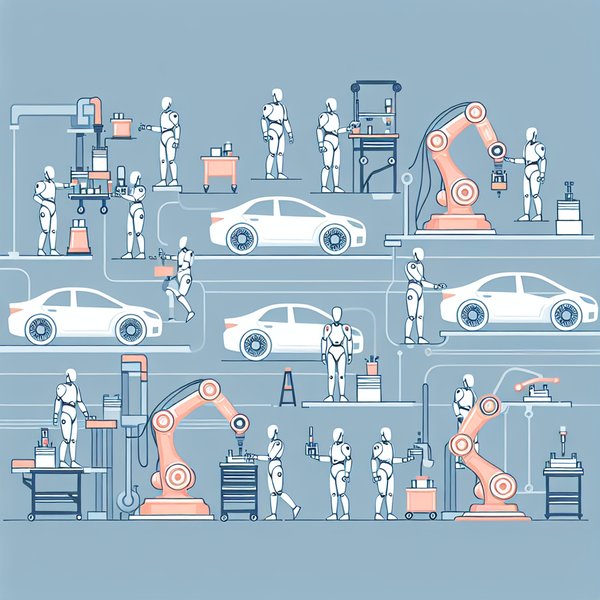

OpenMind introduces OM1, a modular AI runtime designed to empower developers in creating and deploying versatile AI agents on a range of digital platforms and physical robots. These include humanoids, phone apps, websites, quadrupeds, and educational robots like TurtleBot 4.

OM1 agents can process varied inputs such as web data, social media content, camera feeds, and LIDAR readings, and can carry out physical actions such as motion, autonomous navigation, and natural conversation. The goal of OM1 is to make it easy to build highly capable, human-focused robots that can be upgraded and reconfigured to suit different physical form factors.

To kick off your OM1 experience, you can start by running the Spot agent, which utilizes your webcam to identify and label objects. The labeled information is then passed to the LLM, which generates movement, speech, and facial action commands. These commands are displayed on WebSim along with necessary timing and debugging details. To begin, ensure you have the required uv package manager installed on your system.
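The flow described above (detections in, a text description to the LLM, movement/speech/face commands out) can be sketched roughly as follows. This is an illustration only, not OM1's actual code: `Detection`, `AgentCommands`, `describe_scene`, `stub_llm`, and `run_tick` are hypothetical names, and the LLM is replaced by a fixed rule so the example runs offline.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Detection:
    """One labeled object from the camera (hypothetical structure)."""
    label: str
    confidence: float


@dataclass
class AgentCommands:
    """The three command types mentioned above: movement, speech, face."""
    move: str
    speak: str
    face: str


def describe_scene(detections: List[Detection], min_confidence: float = 0.5) -> str:
    """Turn confident detections into a short text prompt for the LLM."""
    labels = [d.label for d in detections if d.confidence >= min_confidence]
    if not labels:
        return "You see nothing of interest."
    return "You see: " + ", ".join(labels) + "."


def stub_llm(prompt: str) -> AgentCommands:
    """Stand-in for a real LLM call (assumption: a fixed rule, no network)."""
    if "person" in prompt:
        return AgentCommands(move="wag tail", speak="Hello there!", face="smile")
    return AgentCommands(move="stand still", speak="", face="neutral")


def run_tick(
    detections: List[Detection],
    llm: Callable[[str], AgentCommands] = stub_llm,
) -> AgentCommands:
    """One pass of the loop: describe the scene, then ask the LLM for commands."""
    return llm(describe_scene(detections))


if __name__ == "__main__":
    print(run_tick([Detection("person", 0.9), Detection("chair", 0.3)]))
```

Passing the LLM in as a callable keeps the sketch testable without API keys; a real deployment would swap `stub_llm` for a function that calls a hosted model.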

To connect OM1 to your robot hardware, refer to our getting started guide. Keep in mind that the provided agent configuration is only an example. If you want to talk to the agent and hear it respond, make sure that ASR (Automatic Speech Recognition) and TTS (Text-to-Speech) are configured in the `spot.json5` file.

OM1 assumes that the robot hardware provides a high-level SDK that accepts elemental movement and action commands such as `backflip`, `run`, `pick up the red apple gently`, `move(0.37, 0, 0)`, and `smile`. If your hardware does not provide a suitable HAL (hardware abstraction layer), you will need to create one using traditional robotics approaches such as reinforcement learning in combination with simulation environments, sensors such as ZED depth cameras, and custom VLAs.
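To make the idea of a high-level command interface concrete, here is a minimal sketch of what such a layer could look like. It is not OM1's or any vendor's API: `RobotHAL`, `LoggingHAL`, and `dispatch` are hypothetical names, and reading the three arguments of `move(0.37, 0, 0)` as forward, lateral, and yaw velocity is an assumption made for illustration.

```python
from abc import ABC, abstractmethod
from typing import List


class RobotHAL(ABC):
    """Hypothetical high-level interface a robot SDK might expose."""

    @abstractmethod
    def move(self, vx: float, vy: float, vyaw: float) -> None:
        """Parametric motion (argument meaning is an assumption)."""

    @abstractmethod
    def perform(self, action: str) -> None:
        """Named action such as 'smile' or 'backflip'."""


class LoggingHAL(RobotHAL):
    """Records commands instead of driving hardware, for dry runs."""

    def __init__(self) -> None:
        self.log: List[str] = []

    def move(self, vx: float, vy: float, vyaw: float) -> None:
        self.log.append(f"move({vx}, {vy}, {vyaw})")

    def perform(self, action: str) -> None:
        self.log.append(f"action:{action}")


def dispatch(hal: RobotHAL, command: str) -> None:
    """Route 'move(a, b, c)' to hal.move and anything else to hal.perform."""
    command = command.strip()
    if command.startswith("move(") and command.endswith(")"):
        args = [float(part) for part in command[len("move("):-1].split(",")]
        if len(args) != 3:
            raise ValueError(f"move expects 3 arguments, got {len(args)}")
        hal.move(*args)
    else:
        hal.perform(command)


if __name__ == "__main__":
    hal = LoggingHAL()
    for cmd in ["move(0.37, 0, 0)", "smile"]:
        dispatch(hal, cmd)
    print(hal.log)
```

A real implementation would subclass `RobotHAL` and forward each call to the vendor SDK; the logging variant is useful for checking agent output before any hardware is attached.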

OM1 can interface with your HAL via USB, serial, ROS2, CycloneDDS, Zenoh, or websockets. For an example of integrating with a humanoid HAL, see Unitree’s C++ SDK example. OM1 should also run on other platforms such as Windows and on single-board computers such as the Raspberry Pi 5 16GB. A full autonomy mode, in which services run together without manual intervention, is planned.
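Because several transports are supported, it can help to keep the command encoding separate from the link that carries it. The sketch below shows one way to do that. The JSON message shape, the `Transport` protocol, and the helper names are all assumptions for illustration, not OM1's wire format.

```python
import json
from typing import Any, Dict, List, Protocol


class Transport(Protocol):
    """Anything that can ship bytes: a serial port, a websocket, a DDS writer."""

    def send(self, payload: bytes) -> None: ...


class InMemoryTransport:
    """Test double that stores payloads instead of sending them."""

    def __init__(self) -> None:
        self.sent: List[bytes] = []

    def send(self, payload: bytes) -> None:
        self.sent.append(payload)


def encode_command(name: str, **params: Any) -> bytes:
    """Serialize a command to UTF-8 JSON (hypothetical message shape)."""
    message = {"command": name, "params": params}
    return json.dumps(message, sort_keys=True).encode("utf-8")


def decode_command(payload: bytes) -> Dict[str, Any]:
    """Inverse of encode_command, as a HAL-side receiver might use it."""
    return json.loads(payload.decode("utf-8"))


if __name__ == "__main__":
    link = InMemoryTransport()
    link.send(encode_command("move", x=0.37, y=0, z=0))
    print(decode_command(link.sent[0]))
```

Swapping `InMemoryTransport` for a class that wraps a real connection leaves the encoding code unchanged.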

For more information on running services and configuring API keys, see the documentation at docs.openmind.org. Before submitting contributions, please read our Contributing Guide. This project is licensed under the MIT License, which allows you to use, modify, and distribute the software. BOM details and DIY instructions are coming soon.