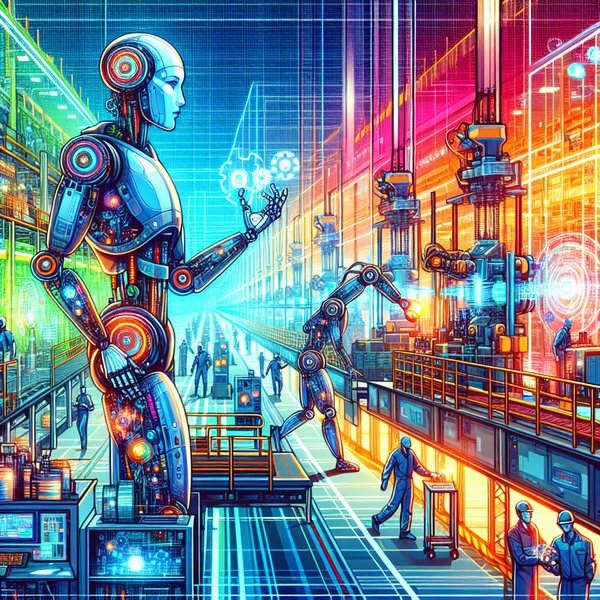

Google DeepMind has introduced two AI models that let robots carry out complex, multistep tasks. The new models, Gemini Robotics 1.5 and Gemini Robotics-ER 1.5, build on the initial iteration, allowing robots to tackle long-horizon tasks and assist humans in a wider range of real-world scenarios.

Previously, the AI model could follow only basic instructions, such as placing a banana in a basket. With the updated models, robots can sort different fruits by color into individual containers. In a demonstration, Google’s Aloha 2 robot sorted a banana, an apple, and a lime onto plates of the corresponding colors while explaining its actions in natural language. The advance is a step toward humanoid robots that can perform more intricate daily tasks.

The interaction between Gemini Robotics-ER 1.5 (the “brain”) and Gemini Robotics 1.5 (the “hands and eyes”) mirrors a supervisor-worker relationship. The former, a vision-language model, processes information about objects, interprets commands, and sends instructions to the latter, a vision-language-action model, which executes the tasks while providing feedback on its reasoning process. Together, the two models can complete tasks efficiently and can draw on external resources such as Google Search when needed.
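The supervisor-worker split described above can be illustrated with a toy planner and executor. This is only a minimal sketch of the general pattern, not how the Gemini Robotics models are implemented or called: `plan`, `execute`, `run_task`, `Step`, `Feedback`, and the `FRUIT_COLORS` table are all hypothetical names invented for illustration.

```python
from dataclasses import dataclass

# Hypothetical stand-ins for illustration only; none of these names
# come from a real Gemini Robotics API.

@dataclass
class Step:
    item: str    # object to move
    target: str  # where it should go

@dataclass
class Feedback:
    step: Step
    success: bool
    explanation: str  # natural-language report from the worker

# Toy "world knowledge" used by the planner (assumed, not from the article).
FRUIT_COLORS = {"banana": "yellow", "apple": "red", "lime": "green"}

def plan(items):
    """Supervisor role: break the sorting task into one step per item."""
    return [Step(item=i, target=f"{FRUIT_COLORS[i]} plate") for i in items]

def execute(step, placed):
    """Worker role: carry out a single step and report what was done."""
    placed[step.item] = step.target
    return Feedback(step, True, f"Placed the {step.item} on the {step.target}.")

def run_task(items):
    """Send each planned step to the worker and collect its feedback."""
    placed, log = {}, []
    for step in plan(items):
        feedback = execute(step, placed)
        log.append(feedback.explanation)
        if not feedback.success:
            break  # a real supervisor might replan here instead of stopping
    return placed, log

placed, log = run_task(["banana", "apple", "lime"])
for line in log:
    print(line)
```

In this sketch the supervisor only plans and the worker only acts, so either side could be swapped out without changing the other, which is the main appeal of splitting the two roles.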

One notable aspect of the new models is their ability to learn and apply knowledge across different robotic systems. Because of this generalized approach, what is learned on one system can benefit others, which makes the models more versatile. Their generalized reasoning also gives robots a broad understanding of the physical world, so they can solve problems methodically by breaking them down into manageable steps.

The Gemini Robotics Team emphasized that general-purpose robots need both advanced reasoning and dexterous control to handle complex tasks. The models’ understanding of physical spaces and interactions, coupled with their adaptability to different scenarios, distinguishes them from earlier, more specialized approaches. Real-world applications, such as sorting clothes by color, show that the models can adapt to changing environments and still complete tasks precisely.