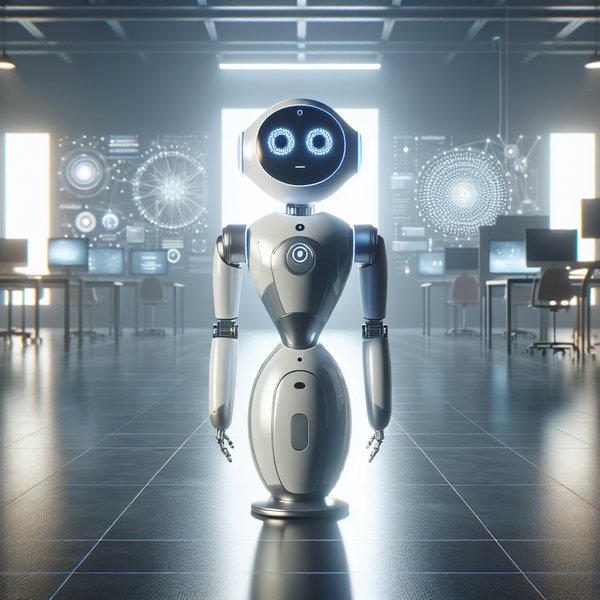

This doctoral thesis addresses the challenge of developing emotionally intelligent, multilingual conversational systems that operate in real time in physical settings. The growing presence of social robots in healthcare, education, and assistive contexts highlights the need for conversational agents that can understand and respond to human emotions across languages and cultural backgrounds. Traditional dialogue systems often struggle to handle emotional complexity, sustain engagement, and adapt to multilingual environments.

This research introduces a unified framework that combines Large Language Models, Reinforcement Learning, and Fuzzy Logic to support emotional, multilingual, and physically embodied Human-Robot Interaction. The thesis pursues three primary objectives. First, to explore the creation and assessment of multilingual dialogue resources with emotional annotations, emphasizing diversity, realism, and coherence. Second, to develop a dialogue management system for multilingual interactions that incorporates contextual and emotional cues when generating responses; this includes designing an emotion-aware conversational agent that evaluates emotional alignment, fosters empathetic engagement, and delivers contextually relevant responses. Third, to design, implement, and evaluate the complete system within social robots, employing interpretable emotional reasoning based on Fuzzy Logic and leveraging multimodal inputs such as speech, touch, light, and physiological data.

The research findings are organized into three main contributions. First, a novel method for generating emotional dialogue datasets in English and Spanish is introduced, which employs Chain-of-Emotion prompts and aligns AI and human preferences to train robust models. Second, an emotionally sensitive, multilingual dialogue architecture is implemented, integrating Supervised Fine-Tuning, optimization-based Reinforcement Learning, and hierarchical tracking of topics and emotions. Third, an emotional model driven by Fuzzy Logic Systems is extended and integrated into physical robots to enable real-time emotional reasoning and expressive behavior through structured stimulus-state-expression mappings.
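To make the idea of a stimulus-state-expression mapping concrete, the following is a minimal sketch of fuzzy inference in plain Python. It is not the thesis's model: the input names (`touch`, `light`), the triangular membership functions, the three rules, the weighted-average defuzzification, and the expression labels are all illustrative assumptions.

```python
def triangular(x, a, b, c):
    """Triangular membership function: 0 at a and c, 1 at the peak b."""
    if x <= a or x >= c:
        return 0.0
    if x <= b:
        return (x - a) / (b - a)
    return (c - x) / (c - b)


def infer_valence(touch, light):
    """Map two stimuli in [0, 1] to an internal valence state in [-1, 1].

    Hypothetical rule base (not taken from the thesis):
      IF touch is high                THEN valence is positive (+1)
      IF touch is medium              THEN valence is neutral  ( 0)
      IF touch is low AND light is low THEN valence is negative (-1)
    """
    low_touch = triangular(touch, -0.5, 0.0, 0.5)
    mid_touch = triangular(touch, 0.0, 0.5, 1.0)
    high_touch = triangular(touch, 0.5, 1.0, 1.5)
    low_light = triangular(light, -0.5, 0.0, 0.5)

    # Each rule is (firing strength, output value); AND is modeled as min.
    rules = [
        (high_touch, 1.0),
        (mid_touch, 0.0),
        (min(low_touch, low_light), -1.0),
    ]
    total = sum(strength for strength, _ in rules)
    if total == 0.0:
        return 0.0  # no rule fired: fall back to a neutral state
    # Weighted average of the rule outputs (zero-order Sugeno style).
    return sum(strength * value for strength, value in rules) / total


def select_expression(valence):
    """Map the internal state to a (hypothetical) expressive behavior."""
    if valence > 0.3:
        return "smile"
    if valence < -0.3:
        return "frown"
    return "neutral"


if __name__ == "__main__":
    for touch, light in [(1.0, 0.5), (0.5, 1.0), (0.0, 0.0)]:
        valence = infer_valence(touch, light)
        print(touch, light, round(valence, 2), select_expression(valence))
```

Because every rule is a readable IF-THEN statement, the path from a stimulus to an expression can be traced by inspection, which is the interpretability property the thesis attributes to its fuzzy emotional model.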

The results for the dialogue management system show that the hierarchical architecture effectively integrates emotional and contextual information to generate coherent, emotionally aligned responses in multiple languages. The system was deployed on two robotic platforms and evaluated through both simulations and real-world interactions. The results indicate that the proposed models generated emotionally aligned responses, supported bilingual dialogues, and maintained consistent internal emotional states that influenced the expressive output. User studies confirmed improved engagement and emotional perception.

In conclusion, this thesis contributes a modular and interpretable framework for developing emotionally intelligent and multilingual conversational agents. The proposed emotional model comprises 43 fuzzy rule tables and 17 fuzzy variables across several emotional state dimensions. In addition, an emotionally aligned dialogue dataset built with AI feedback was developed, comprising 128,125 winner-loser preference pairs, to train emotional models with Reinforcement Learning so that they produce emotionally engaging responses. The system was validated in a user study with 66 participants, in which recognition rates for the robot's emotional expressions reached up to 72.7% across neutral, positive, and negative conditions. By combining synthetic emotional data generation, emotionally aware model training, and embodied emotional reasoning, the system advances the development of scalable, human-aligned social robots ready for real-world deployment in sensitive domains.
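As an illustration of how winner-loser preference pairs can drive training, the sketch below defines a pair record and a generic Bradley-Terry style pairwise loss. The abstract does not specify the optimization objective, so the loss, the `PreferencePair` fields, and the example texts and scores are assumptions rather than the thesis's method.

```python
import math
from dataclasses import dataclass


@dataclass(frozen=True)
class PreferencePair:
    """One preference record (hypothetical field names)."""
    context: str  # dialogue history shown to the model
    winner: str   # response preferred by the AI or human judge
    loser: str    # response that was rejected


def pairwise_loss(score_winner, score_loser):
    """Bradley-Terry style loss: -log(sigmoid(score_winner - score_loser)).

    The loss is small when the model scores the winner well above the loser.
    The two branches are algebraically equal and avoid overflow in exp().
    """
    margin = score_winner - score_loser
    if margin >= 0:
        return math.log1p(math.exp(-margin))
    return -margin + math.log1p(math.exp(margin))


if __name__ == "__main__":
    pair = PreferencePair(
        context="User: I failed my exam today.",
        winner="I'm sorry to hear that. Do you want to talk about it?",
        loser="Exams are held at the end of each term.",
    )
    # Made-up scores standing in for a model's output on each response.
    print(pair.winner)
    print(pairwise_loss(2.0, 0.0) < pairwise_loss(0.0, 2.0))
```

Minimizing this loss over many pairs pushes a model to score preferred responses above rejected ones, which is the general principle behind preference-based training.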