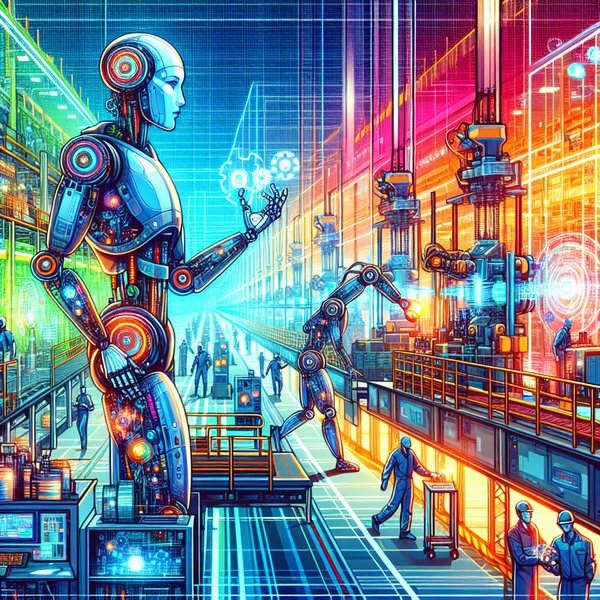

Transform your TurboPi into an intelligent robot by integrating multimodal AI capabilities for natural language commands, scene understanding, and smart task planning. The TurboPi, powered by a Raspberry Pi 5 and a dedicated AI voice module, is evolving from a simple rover into a versatile platform for exploring embodied AI.

Traditional educational robots typically operate within predefined boundaries set by their programmed functions. The enhanced TurboPi breaks free from this limitation. By leveraging cloud-based multimodal models like Qwen or DeepSeek, it gains access to an extensive knowledge base and reasoning engine, which lets it interpret natural language commands, understand the scene in front of it, and plan multi-step tasks.

Upon receiving a voice command like, “Follow the black line and patrol my desk. Tell me if you see a blue cube,” the TurboPi’s integrated AI pipeline springs into action:

1. **Speech Understanding & Task Decomposition:** The onboard audio module captures and converts the speech to text. A cloud-based Large Language Model (LLM) identifies the core intent and breaks it down into sub-tasks.

2. **Precise Navigation & Mobility:** The directive to “follow the black line” engages the rover’s core functions. A line sensor array feeds a PID control loop that steers the Mecanum wheel base to keep the rover on the path.

3. **Dynamic Visual Search & Scene Understanding:** The onboard camera scans the desk surface, providing real-time video for object detection and scene understanding.

4. **Decision-Making & Voice Response:** Upon identifying the blue cube, the LLM synthesizes the information and generates a natural-language response, spoken aloud through the system’s speaker.
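The line-following step above can be illustrated with a minimal PID sketch. It is not taken from the TurboPi codebase: the four-sensor layout, the sensor weights, the gains, and the function names `line_error` and `wheel_speeds` are all assumptions chosen for illustration, and the steering is simplified to driving the left and right wheel pairs at different speeds.

```python
from dataclasses import dataclass


@dataclass
class PID:
    """Textbook PID controller; the gains used below are illustrative, not tuned values."""
    kp: float
    ki: float
    kd: float
    integral: float = 0.0
    prev_error: float = 0.0

    def update(self, error: float, dt: float) -> float:
        # Accumulate the integral term and estimate the derivative from the last error.
        self.integral += error * dt
        derivative = (error - self.prev_error) / dt
        self.prev_error = error
        return self.kp * error + self.ki * self.integral + self.kd * derivative


def line_error(readings, weights=(-1.5, -0.5, 0.5, 1.5)):
    """Average position of the sensors that see the line (hypothetical 4-sensor array).

    0.0 means the line is centered, negative means it is to the left,
    positive means it is to the right. Returns None when no sensor sees the line.
    """
    active = [w for w, on_line in zip(weights, readings) if on_line]
    if not active:
        return None
    return sum(active) / len(active)


def wheel_speeds(base: float, correction: float):
    """Return (left, right) speeds; a positive correction turns the rover to the right."""
    return base + correction, base - correction


if __name__ == "__main__":
    pid = PID(kp=20.0, ki=0.0, kd=2.0)
    # Simulated readings: the line drifts to the right, then returns to center.
    for readings in [(False, True, True, False),
                     (False, False, True, True),
                     (False, True, True, False)]:
        error = line_error(readings)
        if error is None:
            print("line lost, stopping")
            break
        left, right = wheel_speeds(50.0, pid.update(error, dt=0.02))
        print(f"error={error:+.1f} left={left:.1f} right={right:.1f}")
```

On a real rover the simulated readings would come from the line sensor driver and the two speeds would be sent to the motor controller; both interfaces are left out here because they depend on the actual hardware SDK.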

This seamless integration of perception, reasoning, and action highlights the TurboPi’s potential as a versatile platform for embodied AI, bringing intelligence into physical interaction with the real world. It paves the way for developers to move beyond purely cloud-based AI and build tangible, programmable, interactive devices.

The TurboPi is supported by a rich array of learning resources. The TurboPi Tutorials guide users through setting up API access, handling speech processing, and scripting Python to orchestrate sensor data, AI services, and motors. The open-source codebase lets developers see how each integration works and customize it.
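As a rough idea of what such an orchestration script might look like, the sketch below takes a task plan that an LLM could return for the desk-patrol command and dispatches each sub-task to a handler. The JSON schema, the action names, and the handler functions are all hypothetical; they are not part of the TurboPi tutorials or of any model's API, and the handlers only return strings where real code would drive motors, the camera, or the speaker.

```python
import json


# Hypothetical handlers: placeholders for real motor, vision, and speech code.
def follow_line(color: str) -> str:
    return f"following {color} line"


def find_object(color: str, shape: str) -> str:
    return f"searching for {color} {shape}"


def speak(text: str) -> str:
    return f"saying: {text}"


HANDLERS = {
    "follow_line": follow_line,
    "find_object": find_object,
    "speak": speak,
}

# Example of a plan an LLM might be prompted to return (assumed schema).
EXAMPLE_REPLY = """
{
  "steps": [
    {"action": "follow_line", "args": {"color": "black"}},
    {"action": "find_object", "args": {"color": "blue", "shape": "cube"}},
    {"action": "speak", "args": {"text": "I found a blue cube."}}
  ]
}
"""


def run_plan(llm_reply: str) -> list:
    """Parse the plan and run each step in order, skipping unknown actions."""
    plan = json.loads(llm_reply)
    results = []
    for step in plan["steps"]:
        handler = HANDLERS.get(step["action"])
        if handler is None:
            results.append(f"skipped unknown action: {step['action']}")
            continue
        results.append(handler(**step.get("args", {})))
    return results


if __name__ == "__main__":
    for line in run_plan(EXAMPLE_REPLY):
        print(line)
```

Keeping the set of allowed actions in a fixed table like `HANDLERS` is one way to make sure the robot only executes steps the developer has explicitly implemented, whatever text the model returns.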

The integration of multimodal Large Language Models with the sensor-rich TurboPi hardware presents a unique opportunity to explore the next frontier of robotics. With the TurboPi, developers, students, and hobbyists can prototype robots that listen, see, reason, and act in unison on accessible, customizable Raspberry Pi-based hardware.